In deep learning, ReduceLROnPlateau is a learning rate scheduling strategy that reduces the learning rate when a monitored metric, typically the validation loss, has stopped improving.

Why Is That Important?

The learning rate is a crucial hyperparameter in training deep learning models. A well-chosen learning rate can significantly impact the speed of convergence and the final performance of the model.

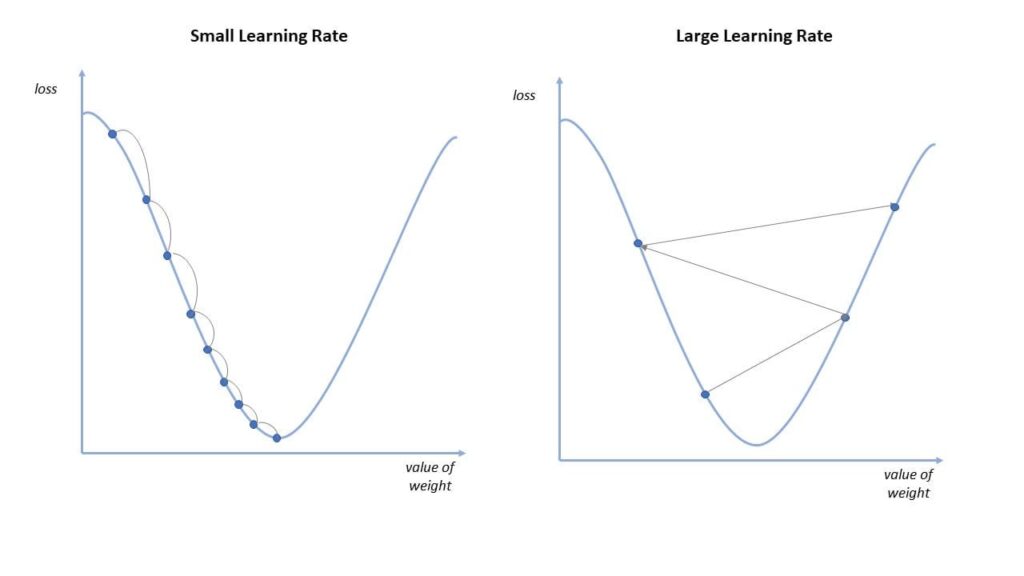

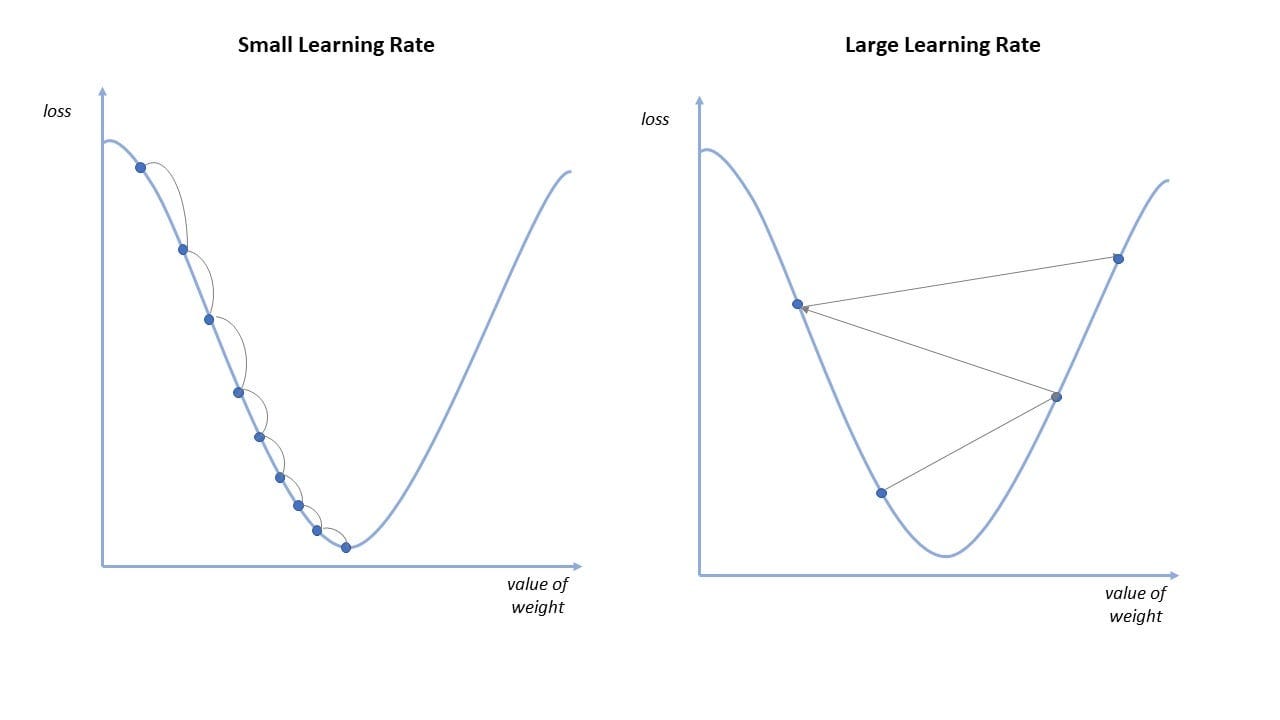

If the learning rate is too high, the model may fail to converge or converge to a suboptimal solution.

If it is too low, training can be extremely slow and may get stuck in local minima.

How ReduceLROnPlateau Works

ReduceLROnPlateau monitors a specific metric, usually the validation loss, during training. When this metric stops improving for a certain number of epochs (the patience), the learning rate is multiplied by a factor smaller than 1 (for example, 0.1).

This reduction helps the model to make finer adjustments to the weights, allowing it to escape plateaus and potentially find better minima.
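The mechanism described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the Keras implementation: the class name `PlateauTracker` and the sample loss values are made up for the example, and it assumes a lower metric is better and ignores extras such as cooldown periods or a minimum improvement threshold.

```python
class PlateauTracker:
    """Simplified plateau logic (hypothetical helper, not the Keras class)."""

    def __init__(self, lr, factor=0.1, patience=10, min_lr=1e-5):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")  # best (lowest) metric value seen so far
        self.wait = 0             # epochs since the last improvement

    def step(self, val_loss):
        """Call once per epoch with the monitored metric; returns the current lr."""
        if val_loss < self.best:
            # Improvement: remember it and reset the counter.
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                # Plateau: shrink the lr, but never below min_lr.
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr


# Made-up validation losses, with patience shortened to 2 for readability.
tracker = PlateauTracker(lr=0.01, factor=0.1, patience=2, min_lr=1e-5)
losses = [1.0, 0.8, 0.8, 0.81, 0.79, 0.79, 0.79]
history = [tracker.step(loss) for loss in losses]
print(history)
```

In this run the learning rate drops at the fourth epoch (two epochs without improvement over 0.8) and again at the seventh, while the brief improvement to 0.79 in between resets the counter.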

Key Parameters

• Monitor: This is the metric to be monitored (e.g., validation loss).

• Factor: The factor by which the learning rate will be reduced (e.g., 0.1).

• Patience: Number of epochs with no improvement after which the learning rate will be reduced.

• Min_lr: A lower bound on the learning rate to prevent it from becoming too small.
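To see how the factor and the lower bound interact, here is a small sketch that computes the learning rate after a given number of reductions. The function name `lr_after_reductions` and the starting value of 0.001 are assumptions chosen for illustration; they do not come from any library.

```python
def lr_after_reductions(initial_lr, factor, min_lr, reductions):
    """Learning rate after `reductions` plateau-triggered cuts, clamped at min_lr."""
    lr = initial_lr
    for _ in range(reductions):
        lr = max(lr * factor, min_lr)
    return lr


# Assumed starting point: lr = 0.001, factor = 0.1, min_lr = 0.00001.
schedule = [lr_after_reductions(1e-3, 0.1, 1e-5, k) for k in range(4)]
print(schedule)
```

With these values the learning rate reaches the lower bound after two reductions, so any further plateau leaves it unchanged. A factor closer to 1 (such as 0.5) gives more, gentler steps before the bound is hit.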

Here is an example of using ReduceLROnPlateau in Keras:

from keras.callbacks import ReduceLROnPlateau

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.1, patience=10, min_lr=0.00001, verbose=1)

model.fit(X_train, y_train, validation_data=(X_val, y_val), epochs=100, callbacks=[reduce_lr])

Advantages of ReduceLROnPlateau

Efficient Training: Keeps the learning rate high for as long as it is still producing improvement, which speeds up the early part of training.

Enhanced Performance: Allows for finer weight adjustments after hitting a plateau, which can lead to better model performance.

Automatic Adaptation: Reacts to the monitored metric rather than following a fixed schedule, so it carries over to different datasets and model architectures with less manual tuning.

Conclusion

ReduceLROnPlateau is a valuable tool in deep learning for managing the learning rate dynamically based on the model’s performance. It helps avoid common pitfalls associated with fixed learning rates. Tuning its parameters well can lead to faster convergence and better results, and can be the difference between a model that just works and one that excels.