AdaBoost, short for Adaptive Boosting, is an ensemble learning method designed to improve the accuracy of classifiers. It was introduced by Yoav Freund and Robert Schapire in 1996. Here’s a detailed explanation of how AdaBoost works and its key concepts:

Basic Idea

AdaBoost combines multiple weak classifiers to create a strong classifier. A weak classifier is one that performs slightly better than random guessing. By combining many of these weak classifiers, AdaBoost creates a final model that has significantly better performance.

How It Works

Initialization:

- Start with a training dataset consisting of 𝑛 samples: (𝑥1, 𝑦1), (𝑥2, 𝑦2), …, (𝑥𝑛, 𝑦𝑛), where 𝑥𝑖 represents the features and 𝑦𝑖 represents the class label of sample 𝑖.

- Initialize a weight 𝑤𝑖 for each sample. Initially, each sample is given equal weight, 𝑤𝑖 = 1/𝑛.
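As a minimal sketch, the initialization step might look like this in plain Python. The toy data and the −1/+1 label encoding are assumptions made for illustration; the text does not prescribe either.

```python
# Toy training set (made up for illustration); labels use a -1/+1 encoding.
X = [[1.0], [2.0], [3.0], [4.0]]
y = [1, 1, -1, -1]

n = len(X)
# Every sample starts with the same weight w_i = 1/n, so the weights sum to 1.
weights = [1.0 / n] * n
```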

Training:

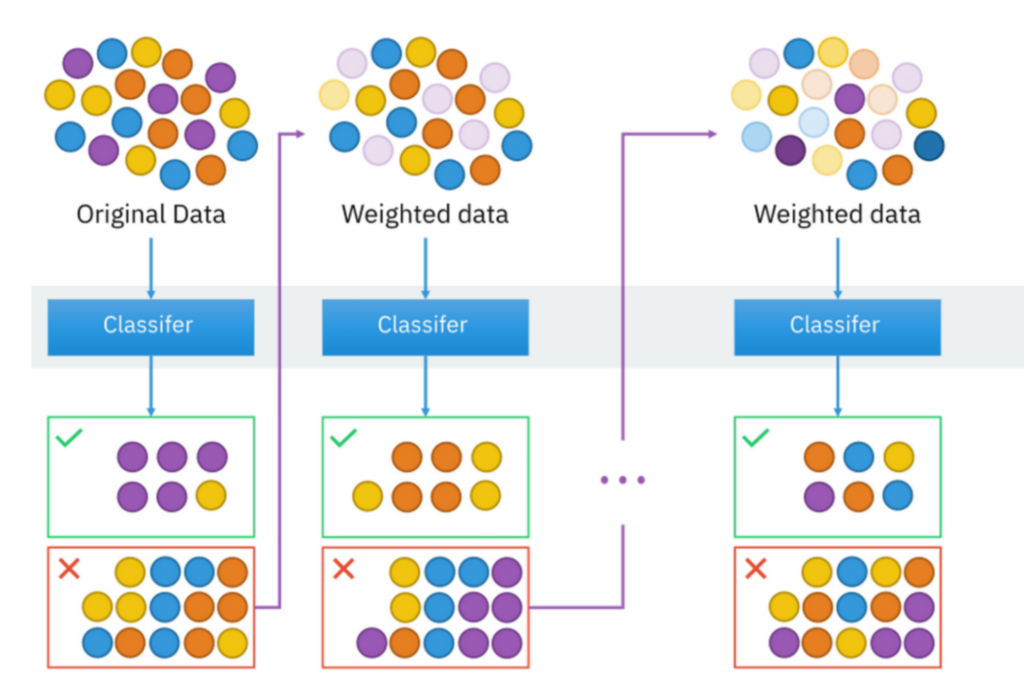

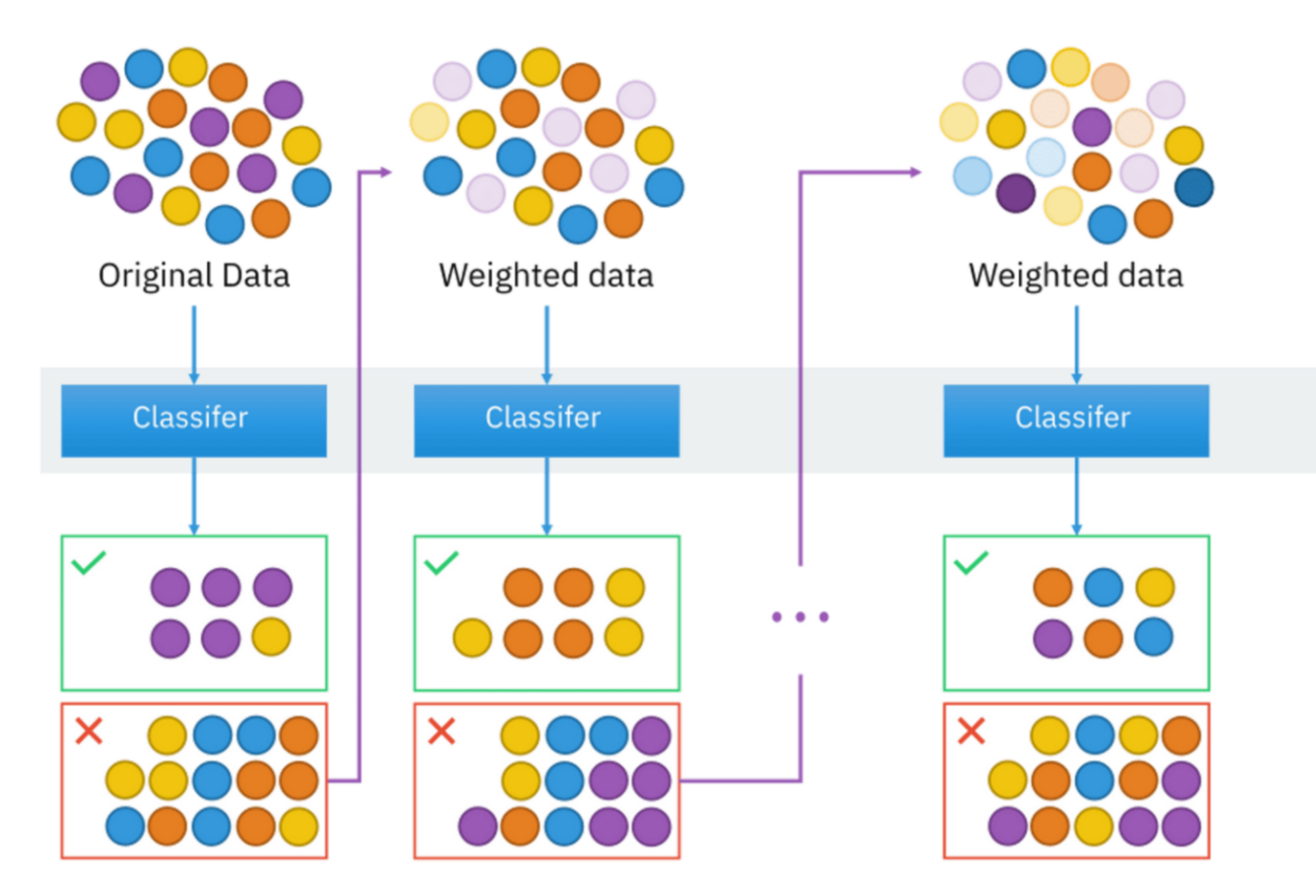

- For 𝑀 iterations (where 𝑀 is the number of weak classifiers to be combined):

- Train a Weak Classifier: Train a weak classifier ℎ𝑚(𝑥) using the weighted training data.

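- Calculate Error: Compute the weighted error 𝜖𝑚 of the weak classifier ℎ𝑚, that is, the sum of the weights of the samples it misclassifies.

- Compute Classifier Weight: Assign the classifier a weight 𝛼𝑚 = ½ ln((1 − 𝜖𝑚)/𝜖𝑚). This weight reflects the classifier’s accuracy; a lower error results in a higher weight.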

- Update Weights: Update the weights of the training samples. Increase the weights of the misclassified samples and decrease the weights of the correctly classified samples.

- Normalization: Normalize all the weights so that they sum to 1.

- Final Model: The final strong classifier is a weighted combination of the weak classifiers, formed by a weighted majority vote. Classifiers with larger weights have more influence on the final decision.
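The training steps above can be sketched in plain Python. This is a minimal sketch under several assumptions that the text does not spell out: labels are encoded as −1/+1, the weak learner is a decision stump found by exhaustive search, each classifier weight is computed as half the log of (1 − error)/error, and sample weights are multiplied by exp(−alpha · 𝑦𝑖 · ℎ𝑚(𝑥𝑖)) before normalization. The function names are illustrative and do not come from any library.

```python
import math

def stump_predict(stump, x):
    """Predict -1 or +1 with a single-feature threshold rule."""
    feature, threshold, polarity = stump
    return polarity if x[feature] <= threshold else -polarity

def fit_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted error."""
    best, best_err = None, float("inf")
    for feature in range(len(X[0])):
        for threshold in sorted({x[feature] for x in X}):
            for polarity in (1, -1):
                stump = (feature, threshold, polarity)
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(stump, xi) != yi)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err

def adaboost_fit(X, y, M):
    """Train up to M stumps; returns a list of (alpha, stump) pairs."""
    n = len(X)
    w = [1.0 / n] * n                      # equal initial weights
    ensemble = []
    for _ in range(M):
        stump, err = fit_stump(X, y, w)    # train on the weighted data
        if err >= 0.5:                     # no better than chance: stop
            break
        err = max(err, 1e-10)              # guard against log of infinity
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, stump))
        # Misclassified samples (y * h = -1) grow, correct ones shrink.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]       # normalize so weights sum to 1
    return ensemble

def adaboost_predict(ensemble, x):
    """Weighted majority vote of all stumps."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D data (made up) that no single threshold separates perfectly.
X = [[v] for v in range(10)]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
model = adaboost_fit(X, y, M=3)
predictions = [adaboost_predict(model, x) for x in X]
```

On this toy data a single stump misclassifies at least three points, while the weighted vote of three stumps reproduces every training label.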

Key Points

- Adaptive Weighting: The key feature of AdaBoost is the adaptive weighting of the training samples. It focuses more on the difficult-to-classify samples by giving them higher weights in subsequent iterations.

- Combining Weak Classifiers: AdaBoost’s ability to combine many weak classifiers (such as decision stumps) into a strong classifier is one of its main strengths.

- Minimizing Error: By iteratively updating the weights and focusing on the errors made in previous rounds, AdaBoost effectively reduces the overall error rate.
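To make the adaptive weighting concrete, here is one round of the arithmetic as a small sketch. It assumes 10 equally weighted samples of which a hypothetical weak classifier misclassifies 3, and it uses the common exponential update with classifier weight alpha = ½ ln((1 − error)/error); the numbers are illustrative, not taken from a real dataset.

```python
import math

n = 10
misclassified = {6, 7, 8}   # hypothetical indices the weak classifier gets wrong
w = [1.0 / n] * n

eps = sum(w[i] for i in misclassified)        # weighted error
alpha = 0.5 * math.log((1 - eps) / eps)       # classifier weight

# Scale misclassified samples up and correctly classified samples down.
w = [wi * math.exp(alpha if i in misclassified else -alpha)
     for i, wi in enumerate(w)]
total = sum(w)
w = [wi / total for wi in w]                  # normalize to sum to 1

hard_mass = sum(w[i] for i in misclassified)
print(round(eps, 2), round(alpha, 4), round(w[0], 4), round(w[6], 4), round(hard_mass, 2))
# prints: 0.3 0.4236 0.0714 0.1667 0.5
```

After the update, the three misclassified samples together carry half of the total weight, so the next weak classifier cannot do well by repeating the same mistakes.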

Example Scenario

Suppose you have a dataset to classify emails as spam or not spam. Using AdaBoost, you start by initializing weights equally among all training samples. You then train a weak classifier, such as a decision stump (a one-level decision tree).

After evaluating its performance, you adjust the weights of the misclassified samples to give them more importance. This process is repeated for a predetermined number of iterations or until the error rate becomes sufficiently low.

The final model combines all the weak classifiers, weighted by their accuracy, to make a robust spam filter.
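As a rough illustration of that final vote, the sketch below combines three hand-written stumps for the spam scenario. Everything in it is hypothetical: the email fields, the stump rules, and the classifier weights are invented for illustration rather than learned from data.

```python
# Each weak classifier maps an email (a dict of made-up features)
# to +1 (spam) or -1 (not spam).
def has_free(email):
    return 1 if "free" in email["subject"].lower() else -1

def many_links(email):
    return 1 if email["num_links"] > 3 else -1

def unknown_sender(email):
    return 1 if not email["sender_known"] else -1

# (classifier weight, stump) pairs; the weights are invented, not trained.
ensemble = [(0.9, has_free), (0.4, many_links), (0.7, unknown_sender)]

def classify(email):
    """Weighted majority vote over all stumps."""
    score = sum(alpha * stump(email) for alpha, stump in ensemble)
    return "spam" if score > 0 else "not spam"

newsletter = {"subject": "FREE prize inside", "num_links": 1, "sender_known": True}
phishing = {"subject": "Meeting notes", "num_links": 5, "sender_known": False}
print(classify(newsletter))  # prints: not spam
print(classify(phishing))    # prints: spam
```

The first email trips the highest-weighted stump but is outvoted by the other two, which shows how the combined decision can differ from that of any single weak classifier.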

Conclusion

AdaBoost is a powerful and intuitive method for boosting the performance of weak classifiers. By iteratively focusing on the most challenging samples, it effectively creates a strong classifier from a set of weak ones, making it a valuable tool in machine learning.