Ever wondered how AI can solve complex problems almost as if it’s thinking like us?

Demystifying Chain of Thought Prompting (CoT)

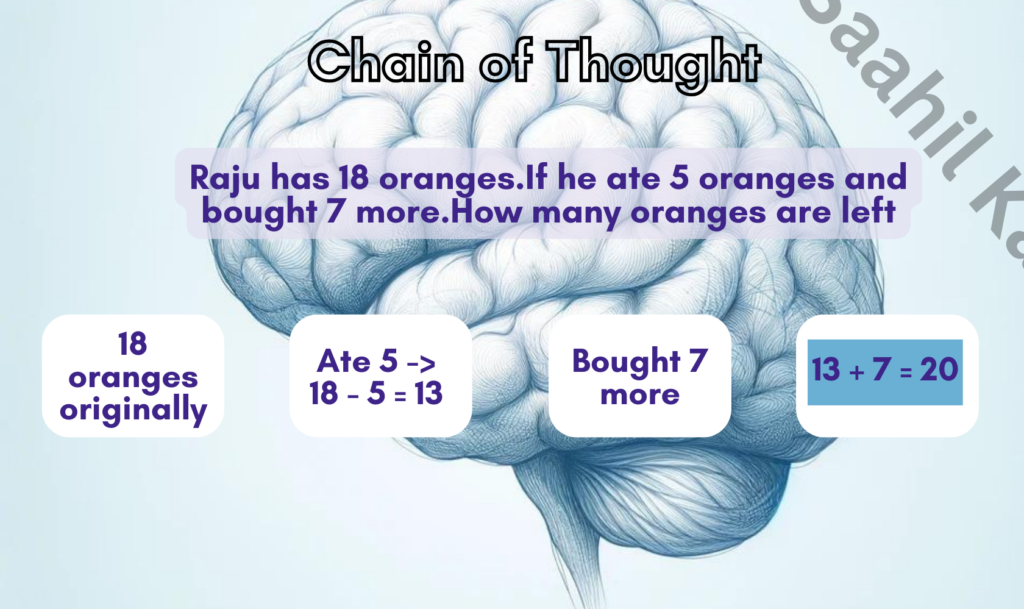

Imagine trying to solve a massive puzzle. It’s daunting if you look at the whole picture at once, right? But what if you tackle it piece by piece, step by step? That’s exactly what CoT prompting does for AI.

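In simple terms, Chain of Thought prompting is like giving AI a “thought process.” Instead of expecting an AI to jump straight to a solution, we guide it through a series of logical steps or “thoughts.” In practice, this usually means including worked examples in the prompt that spell out their intermediate reasoning before the answer, so the model produces its own intermediate steps for the new question. Each step builds on the last, paving the way to the solution.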
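To make the idea concrete, here is a minimal Python sketch of few-shot CoT prompting. It only builds the prompt text (no model is called), and the `Exemplar` class and `build_prompt` function are hypothetical helpers written for illustration, not part of any library. The single worked example is modeled on the style of the arithmetic exemplars in the CoT paper linked at the end of this post. The only difference between the two prompt styles is whether the example shows its intermediate reasoning before the answer.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example for a few-shot prompt (hypothetical helper type)."""
    question: str
    reasoning: str  # the step-by-step "chain of thought"
    answer: str


def build_prompt(exemplars: list[Exemplar], question: str, chain_of_thought: bool = True) -> str:
    """Assemble a few-shot prompt; include each exemplar's reasoning only in CoT mode."""
    parts = []
    for ex in exemplars:
        if chain_of_thought:
            parts.append(f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}.")
        else:
            parts.append(f"Q: {ex.question}\nA: The answer is {ex.answer}.")
    # End with an open "A:" so the model continues in the same style as the exemplars.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


EXEMPLARS = [
    Exemplar(
        question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                  "Each can has 3 tennis balls. How many tennis balls does he have now?"),
        reasoning=("Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
                   "5 + 6 = 11."),
        answer="11",
    ),
]

if __name__ == "__main__":
    new_question = ("The cafeteria had 23 apples. If they used 20 to make lunch and "
                    "bought 6 more, how many apples do they have?")
    print(build_prompt(EXEMPLARS, new_question, chain_of_thought=True))
```

Sending the `chain_of_thought=True` version to a model invites it to write out its own intermediate steps before stating an answer; the `False` version invites a direct answer.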

Here’s a deeper look into how CoT prompting enhances the capabilities of AI:

1. Improves Problem-Solving Skills

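CoT prompting can significantly boost a large language model’s (LLM’s) problem-solving ability. By breaking a complex query into manageable intermediate steps, the model can work through each part of the problem, much like a human would. In the paper that introduced the technique, this approach substantially improved accuracy on multi-step arithmetic word problems, commonsense questions, and symbolic reasoning tasks, with the gains appearing mainly in very large models. The same step-by-step breakdown is also commonly used for programming challenges and multi-faceted decision-making, at the cost of longer, more token-heavy responses.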

2. Enhances Understanding and Context Grasping

One of the challenges with traditional AI models has been their tendency to give surface-level answers that miss context or nuance. CoT prompting mitigates this by encouraging the model to work through the relevant details and implications of a question before committing to a conclusion. Spelling out the reasoning step by step tends to produce answers that are more accurate and better suited to the context, although it does not guarantee them.

3. Enables More Transparent Decision-Making

A significant advantage of CoT prompting is the transparency it brings to AI decision-making. Each “thought” or step in the reasoning process is written out, allowing developers and users alike to follow how the model arrived at its answer. This transparency supports trust and reliability, especially in high-stakes applications like healthcare diagnostics or legal advice, where understanding the rationale behind an AI’s decision can be as important as the decision itself. One caveat: the written steps are the model’s generated explanation, not a guaranteed record of its internal computation, so they should be checked rather than taken on faith.
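Because the reasoning comes back as plain text, it can be inspected programmatically. The sketch below assumes the model ends its response with a sentence of the form “The answer is X.” and splits everything before that into individual steps. `split_reasoning` is a hypothetical helper, and the sample completion is hand-written for illustration rather than real model output.

```python
import re
from typing import Optional

# Assumed final-answer convention: the completion ends with "The answer is X."
ANSWER_PATTERN = re.compile(r"The answer is\s+(.+?)\.?\s*$", re.IGNORECASE)


def split_reasoning(completion: str) -> tuple[list[str], Optional[str]]:
    """Split a CoT completion into (reasoning steps, final answer or None)."""
    text = completion.strip()
    match = ANSWER_PATTERN.search(text)
    answer = match.group(1).strip() if match else None
    body = text[: match.start()] if match else text
    # Naive sentence split on ". " boundaries; adequate for short arithmetic chains only.
    steps = [s.strip() for s in re.split(r"(?<=\.)\s+", body) if s.strip()]
    return steps, answer


# Hand-written example completion (not real model output).
completion = (
    "The cafeteria had 23 apples originally. They used 20 to make lunch. "
    "So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9. "
    "The answer is 9."
)
steps, answer = split_reasoning(completion)
for number, step in enumerate(steps, 1):
    print(f"Step {number}: {step}")
print("Final answer:", answer)
```

Keeping the steps separate from the final answer lets a reviewer read, log, or check each step on its own instead of only grading the last line.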

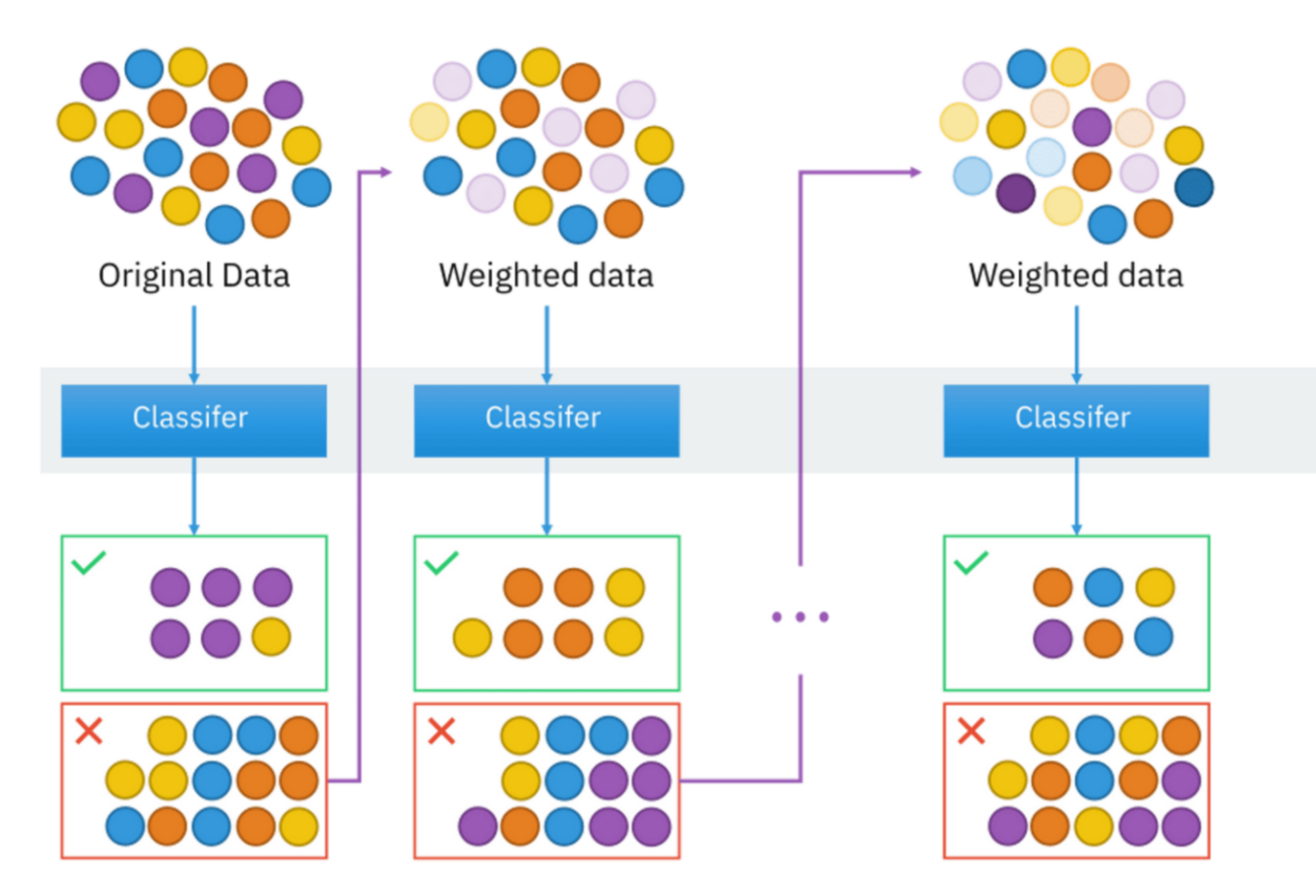

4. Facilitates Training and Feedback

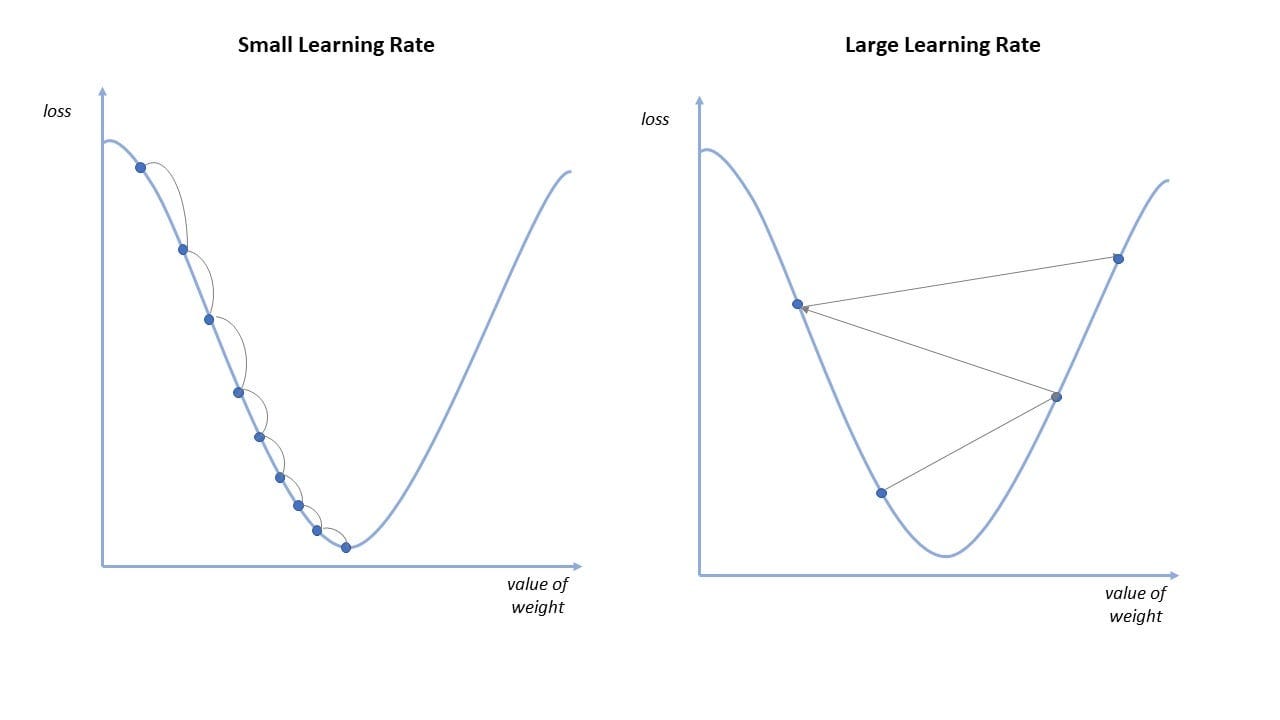

With CoT, improving an AI model becomes more aligned with how humans teach and learn. Because the model produces a step-by-step explanation of its reasoning, trainers can more easily identify the step where it goes wrong and adjust the prompt examples, training data, or parameters accordingly. This feedback loop can refine not only the model’s accuracy but also its ability to generalize from specific examples to broader applications.
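As a toy illustration of locating where a chain goes wrong, the sketch below scans reasoning steps for simple arithmetic claims such as `23 - 20 = 3` and reports the first one that does not hold. `first_faulty_step` is a hypothetical helper, and its regular expression handles only single binary operations on plain numbers; real reasoning chains would need far more robust checking.

```python
import operator
import re
from typing import Optional

# Operator symbols expected in simple arithmetic claims, mapped to the operations they denote.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

# Matches a single binary claim such as "23 - 20 = 3" (integers or decimals).
# This is an illustrative assumption, not a general math parser.
NUMBER = r"-?\d+(?:\.\d+)?"
CLAIM = re.compile(rf"({NUMBER})\s*([+\-*/])\s*({NUMBER})\s*=\s*({NUMBER})")


def first_faulty_step(steps: list[str]) -> Optional[int]:
    """Return the 1-based index of the first step containing a false arithmetic claim, or None."""
    for index, step in enumerate(steps, 1):
        for left, op, right, claimed in CLAIM.findall(step):
            if op == "/" and float(right) == 0:
                return index  # a claimed division by zero is always wrong
            actual = OPS[op](float(left), float(right))
            if abs(actual - float(claimed)) > 1e-9:
                return index
    return None


steps = [
    "The cafeteria had 23 apples originally.",
    "They used 20 to make lunch, so they had 23 - 20 = 3.",
    "They bought 6 more apples, so they have 3 + 6 = 8.",  # deliberate slip
]
print(first_faulty_step(steps))  # → 3
```

Checking each step, rather than only the final answer, is what reveals that this chain derailed at step 3 even though steps 1 and 2 were sound.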

5. Broadens Application Scope

The detailed reasoning that CoT prompting elicits broadens the scope of what AI can achieve. From writing more coherent, contextually rich articles to creating detailed project plans or supporting sophisticated scientific research, the range of applications grows considerably. By prompting AI models to reason step by step, more like humans do, we can apply them in areas previously considered too complex for automated systems.

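For those who want to dive deeper into the mechanics and potential of CoT prompting, check out the groundbreaking paper that introduced it, “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” (Wei et al., 2022): https://arxiv.org/pdf/2201.11903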

In conclusion, Chain of Thought prompting isn’t just a technical enhancement; it’s a paradigm shift in how we envision the capabilities of AI. By mimicking the step-by-step way humans reason through problems, CoT-equipped LLMs are poised to become more integral and trusted partners in a variety of fields, pushing the boundaries of what LLMs can achieve and understand.