Yoga Pose Estimation using Transfer Learning and Experiment Tracking

Yoga Pose Estimation using Transfer Learning and Experiment Tracking (using GitHub Actions)

The primary goal was to classify yoga poses using transfer learning with pre-trained models (VGG16, ResNet50, InceptionV3, and MobileNetV2) and to track the experiments with MLflow for better management and evaluation of the models.

Here’s a breakdown of everything I did:

Importing Libraries and Configuring Paths

I started by importing essential libraries, including TensorFlow, Matplotlib, and Scikit-learn. I also imported models like VGG16, ResNet50, InceptionV3, and MobileNetV2 from TensorFlow’s Keras applications for transfer learning. I set up paths for the datasets and the list of class names.

Loading and Visualizing the Dataset

I loaded the dataset from the specified directory using tf.keras.utils.image_dataset_from_directory(), which labels the images automatically based on their class folders. I then visualized a subset of the images using Matplotlib to confirm that they were loaded correctly and to see the class distribution.

Splitting the Dataset into Training, Validation, and Testing Sets

I used the train_test_split() function from Scikit-learn to divide the dataset into training, validation, and testing sets. The images were then organized into their respective folders using the split_image_folder() function, which created the necessary directory structure.
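
As a rough illustration of this step, here is a minimal sketch of what a split_image_folder() helper could look like. The signature, the split fractions, the stratified splitting, and the train/val/test folder names are all assumptions made for the example, not details taken from the original notebook.

```python
import shutil
from pathlib import Path

from sklearn.model_selection import train_test_split


def split_image_folder(src_dir, dst_dir, val_frac=0.15, test_frac=0.15, seed=42):
    """Copy images from src_dir/<class>/ into dst_dir/{train,val,test}/<class>/.

    Hypothetical helper: the fractions and folder names are assumptions.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)

    # Collect every file together with the name of its class folder.
    paths = sorted(p for p in src_dir.glob("*/*") if p.is_file())
    labels = [p.parent.name for p in paths]

    # Carve off the test set first, then split the remainder into train/val.
    # Stratifying keeps class proportions similar across the three subsets.
    rest_paths, test_paths, rest_labels, _ = train_test_split(
        paths, labels, test_size=test_frac, stratify=labels, random_state=seed
    )
    val_share = val_frac / (1.0 - test_frac)  # fraction of the remainder
    train_paths, val_paths = train_test_split(
        rest_paths, test_size=val_share, stratify=rest_labels, random_state=seed
    )

    subsets = {"train": train_paths, "val": val_paths, "test": test_paths}
    for subset, subset_paths in subsets.items():
        for path in subset_paths:
            target = dst_dir / subset / path.parent.name
            target.mkdir(parents=True, exist_ok=True)
            shutil.copy2(path, target / path.name)

    # Report how many files ended up in each subset.
    return {subset: len(subset_paths) for subset, subset_paths in subsets.items()}
```

The resulting layout (one subfolder per class inside each subset) is the structure that tf.keras.utils.image_dataset_from_directory() expects when it infers labels from folder names.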

Defining the Model Creation Function

I defined the create_model() function, which takes a pre-trained base model (like VGG16 or ResNet50) and adds custom layers on top of it. The base model’s layers were frozen to retain the pre-trained weights, and custom layers like GlobalAveragePooling2D, Dense, and Dropout were added to adapt the model to the specific classification task.

The model was compiled with the Adam optimizer, using categorical crossentropy as the loss function and metrics like Categorical Accuracy and Top-K Categorical Accuracy.
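
The function described above might look roughly like the sketch below. The hidden layer width (256), the dropout rate (0.3), the top-k value (3), and the explicit input_shape argument are assumptions chosen for illustration; the original values are not stated. Model-specific input preprocessing is also left out.

```python
import tensorflow as tf


def create_model(base_model, num_classes, input_shape=(256, 256, 3), dropout_rate=0.3):
    """Stack a small classification head on a frozen pre-trained base.

    Layer sizes, dropout rate, and k are illustrative assumptions.
    """
    # Freeze the base so its pre-trained weights are not updated.
    base_model.trainable = False

    inputs = tf.keras.Input(shape=input_shape)
    # training=False keeps layers such as BatchNormalization in inference mode.
    x = base_model(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    x = tf.keras.layers.Dropout(dropout_rate)(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(),
        loss="categorical_crossentropy",
        metrics=[
            tf.keras.metrics.CategoricalAccuracy(name="categorical_accuracy"),
            tf.keras.metrics.TopKCategoricalAccuracy(k=3, name="top_k_categorical_accuracy"),
        ],
    )
    return model
```

A base model for this function could be created with, for example, tf.keras.applications.MobileNetV2(include_top=False, weights="imagenet", input_shape=(256, 256, 3)). Only the two Dense layers in the head remain trainable.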

Preparing Training and Validation Datasets

I reloaded the images for training and validation using tf.keras.utils.image_dataset_from_directory() to create datasets compatible with TensorFlow’s training functions. These datasets were configured with a batch size of 32 and an image size of 256×256 pixels.
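
A minimal sketch of this loading step is shown below. The batch size and image size come from the text; the load_split() helper name, the seed, the prefetching, and the choice of label_mode="categorical" (one-hot labels, which categorical crossentropy expects) are assumptions.

```python
import tensorflow as tf

IMAGE_SIZE = (256, 256)  # from the text
BATCH_SIZE = 32          # from the text


def load_split(directory, shuffle=True, seed=42):
    """Load one split (e.g. train or val) from a folder with one subfolder per class."""
    dataset = tf.keras.utils.image_dataset_from_directory(
        directory,
        label_mode="categorical",  # one-hot labels for categorical crossentropy
        image_size=IMAGE_SIZE,
        batch_size=BATCH_SIZE,
        shuffle=shuffle,
        seed=seed,
    )
    # Read class_names before prefetch(), which returns a dataset without it.
    class_names = dataset.class_names
    return dataset.prefetch(tf.data.AUTOTUNE), class_names
```

The class names are inferred from the subfolder names in alphabetical order, so the same list can be reused later to map predictions back to labels.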

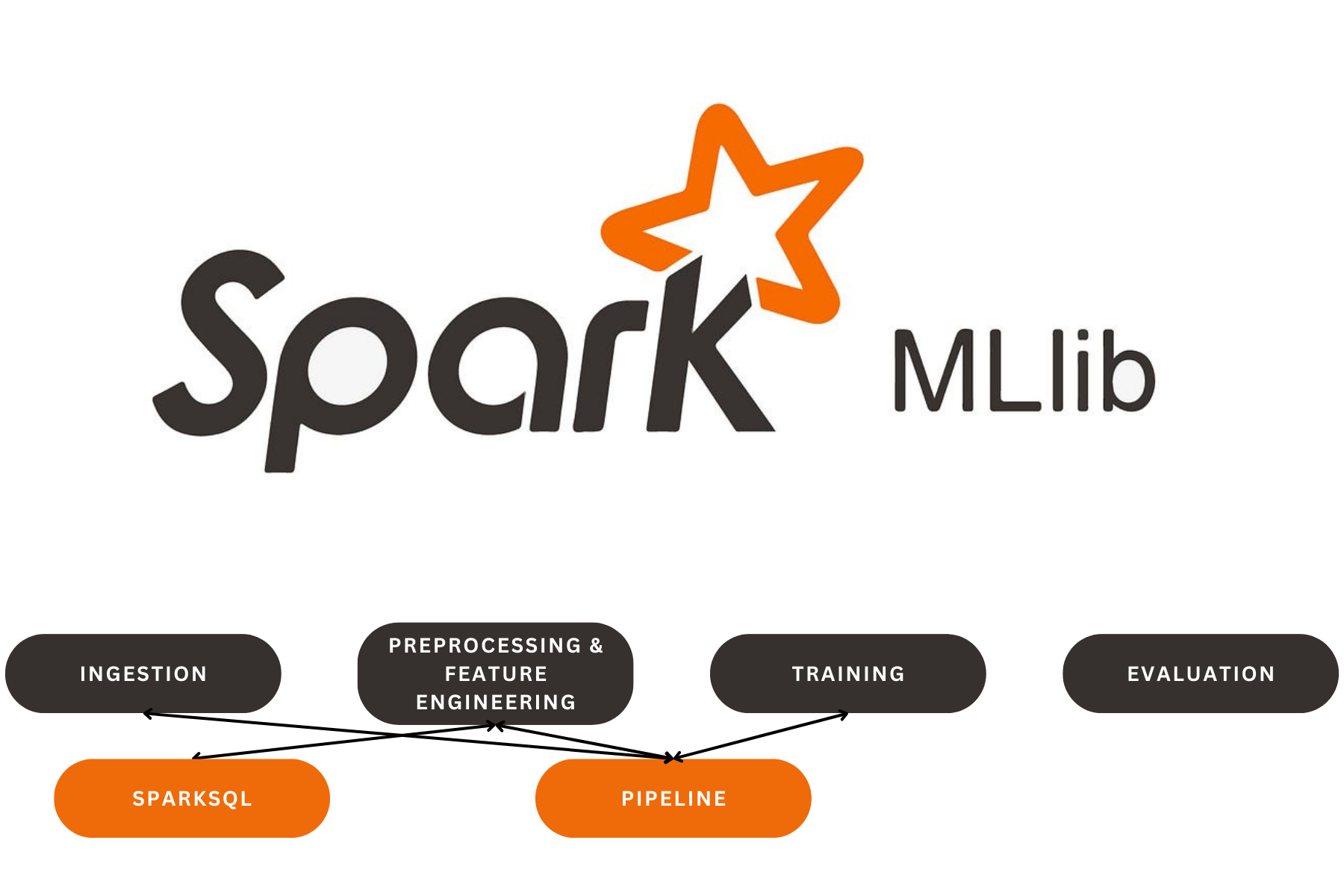

Setting Up MLflow for Experiment Tracking

I set up MLflow to track the experiments and enabled autologging for TensorFlow, which automatically logs parameters, metrics, and models during training. I defined an experiment name and started a separate MLflow run for each model (VGG16, ResNet50, InceptionV3, and MobileNetV2).

Training the Models

I trained each model in turn using the model.fit() function for 10 epochs. During each training run, MLflow logged the training and validation metrics. After training, I plotted the loss and accuracy curves to visualize each model’s performance over the epochs.

Logging and Saving the Model

After training, I logged the final metrics (e.g., final train loss, validation loss, train accuracy, validation accuracy, and top-k categorical accuracy) using MLflow. The trained models were saved and logged to MLflow for future reference or deployment.
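
One way to derive those final values is to take the last entry of each metric in the history dictionary that model.fit() returns. The helper below is a hypothetical sketch; the final_train_ and final_val_ naming scheme is an assumption, not the naming used in the original runs.

```python
def final_metrics(history):
    """Return the last-epoch value of every metric in a Keras-style history dict.

    `history` maps metric names to per-epoch lists, like `History.history`.
    """
    metrics = {}
    for key, values in history.items():
        if not values:
            continue  # skip metrics with no recorded epochs
        # Keras prefixes validation metrics with "val_"; everything else is
        # treated as a training metric here.
        if key.startswith("val_"):
            name = "final_" + key
        else:
            name = "final_train_" + key
        metrics[name] = float(values[-1])
    return metrics


example = {
    "loss": [1.2, 0.8],
    "val_loss": [1.3, 0.9],
    "categorical_accuracy": [0.5, 0.7],
}
print(final_metrics(example))
```

Inside an active MLflow run, the resulting dictionary can be passed to mlflow.log_metrics() to record all of the values in one call.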

Loading and Using the Model for Inference

In a separate notebook, I loaded the best-performing model from MLflow using the model name and alias. I then loaded an image, preprocessed it to match the model’s input shape, and used the model to predict the yoga pose. The predicted class was mapped back to its corresponding label and printed as the final output.
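
The last step, mapping the model output back to a label, can be sketched with NumPy alone. The decode_prediction() helper and the pose names in the example are hypothetical. Loading the model and image is omitted here; in MLflow, a model alias is typically addressed with a URI of the form models:/<name>@<alias>.

```python
import numpy as np


def decode_prediction(probabilities, class_names, top_k=3):
    """Map a softmax output back to (label, probability) pairs, best first.

    `probabilities` is the model output for a single image, with shape
    (num_classes,) or (1, num_classes).
    """
    probs = np.asarray(probabilities, dtype=float).reshape(-1)
    if probs.size != len(class_names):
        raise ValueError("expected one probability per class name")
    # argsort is ascending, so reverse it to put the highest score first.
    order = np.argsort(probs)[::-1][:top_k]
    return [(class_names[i], float(probs[i])) for i in order]


# Hypothetical class names, in the same order the training dataset used.
names = ["downdog", "tree", "warrior"]
best_label, best_prob = decode_prediction([[0.1, 0.7, 0.2]], names)[0]
print(best_label)
```

The class name list must be in the same order as the one inferred during training, otherwise the indices will point at the wrong labels.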

About

An AI Geek and a lifelong learner, who thrives in coding and problem-solving through ML, DL, and LLMs.

Copyright ©2024 All rights reserved.