Classification of Fault Types in Steel Plates Using SMOTE and XGBoost with W&B Tracking


The primary objective of this workflow was to build a robust classification model for steel plate faults by handling imbalanced data, selecting significant features, and applying a strong gradient-boosting classifier, XGBoost. Each step was tailored to improve the model's performance and help it generalize to unseen data.

Here is how it works.

Importing Libraries and Configuring Warnings

I started by importing the essential libraries and configuring the warnings filter to ignore specific categories (e.g., NumbaDeprecationWarning, ConvergenceWarning), which keeps the output free of irrelevant warnings.

Loading and Exploring the Data

I loaded the training and test datasets using pd.read_csv() and inspected them with the info(), describe(), and head() methods to understand their structure and summary statistics. I then identified and printed the numerical features and the target labels.
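A small sketch of this loading and inspection step. The real file names are not given, so the example reads a tiny inline CSV through `io.StringIO` with made-up values. The seven fault columns in `TARGETS`, the presence of an `id` column, and the helper `describe_dataset` are all assumptions (the label names follow the public steel plates faults dataset), not the author's code.

```python
import io

import pandas as pd

# Assumed fault label columns (as in the public steel plates faults dataset).
TARGETS = ["Pastry", "Z_Scratch", "K_Scatch", "Stains",
           "Dirtiness", "Bumps", "Other_Faults"]

# Tiny inline stand-in for the real training CSV (hypothetical values).
CSV = """id,X_Minimum,X_Maximum,Pixels_Areas,Pastry,Z_Scratch,K_Scatch,Stains,Dirtiness,Bumps,Other_Faults
0,584,590,267,0,0,0,0,0,0,1
1,808,816,108,0,0,0,0,0,1,0
"""


def describe_dataset(df: pd.DataFrame, targets: list[str]) -> tuple[list[str], list[str]]:
    """Hypothetical helper: print summaries, return (numerical features, target labels)."""
    df.info()
    print(df.describe())
    print(df.head())
    numeric = df.select_dtypes(include="number").columns
    # Numerical features are numeric columns that are neither labels nor the row id.
    features = [c for c in numeric if c not in targets and c != "id"]
    labels = [c for c in targets if c in df.columns]
    print("Numerical features:", features)
    print("Target labels:", labels)
    return features, labels


train = pd.read_csv(io.StringIO(CSV))
features, labels = describe_dataset(train, TARGETS)
```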

Handling Infinite Values

I replaced any infinite values with NaN to avoid issues during processing and modeling.
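The replacement can be sketched with pandas' `DataFrame.replace`; the helper name `replace_infinite` and the toy `ratio` column are illustrative only. Ratio-style features are a typical source of infinities when a denominator is zero.

```python
import numpy as np
import pandas as pd


def replace_infinite(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with +inf and -inf replaced by NaN."""
    return df.replace([np.inf, -np.inf], np.nan)


df = pd.DataFrame({"ratio": [1.0, np.inf, -np.inf, 2.5]})
clean = replace_infinite(df)
print(clean["ratio"].isna().sum())  # 2
```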

Visualizing the Data

- Histograms: I plotted histograms of the numerical features to visualize their distributions.

- Box Plots: I created box plots of each numerical feature grouped by target label to spot patterns and outliers.
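Both plot types can be sketched with pandas' built-in matplotlib plotting. The data below is synthetic, and collapsing the one-hot fault columns into a single `fault` column with `idxmax` is an assumption about how "each target label" is grouped; the original plotting code is not shown.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
# Hypothetical data: two numerical features and three one-hot fault labels.
df = pd.DataFrame({
    "Pixels_Areas": rng.lognormal(5, 1, 60),
    "Steel_Plate_Thickness": rng.normal(80, 20, 60),
    "Bumps": [1, 0, 0] * 20,
    "Stains": [0, 1, 0] * 20,
    "Other_Faults": [0, 0, 1] * 20,
})
features = ["Pixels_Areas", "Steel_Plate_Thickness"]
targets = ["Bumps", "Stains", "Other_Faults"]

# Histograms: one panel per numerical feature.
hist_axes = df[features].hist(bins=20, figsize=(8, 3))

# Collapse the one-hot columns into one categorical label for grouping.
df["fault"] = df[targets].idxmax(axis=1)

# Box plots: distribution of each feature within each fault class.
fig, axes = plt.subplots(1, len(features), figsize=(10, 4))
for ax, col in zip(axes, features):
    df.boxplot(column=col, by="fault", ax=ax)
    ax.set_title(col)
fig.suptitle("")  # drop pandas' automatic "Boxplot grouped by" title
plt.close("all")
```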

Feature Engineering

I derived new features (X, Y, Luminosity, and Area_Perimeter_Ratio) from existing columns and then dropped the original columns used to create them.
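The exact formulas are not given, so the sketch below uses plausible definitions that are assumptions: X and Y as bounding-box extents, Luminosity as the luminosity range, and Area_Perimeter_Ratio as pixel area over total perimeter. The source column names follow the public steel plates faults dataset, and `engineer_features` is a hypothetical helper.

```python
import pandas as pd


def engineer_features(df: pd.DataFrame) -> pd.DataFrame:
    """Add X, Y, Luminosity and Area_Perimeter_Ratio, then drop their source columns.

    The formulas are illustrative assumptions, not the author's exact definitions.
    """
    out = df.copy()
    out["X"] = out["X_Maximum"] - out["X_Minimum"]  # width of the defect's bounding box
    out["Y"] = out["Y_Maximum"] - out["Y_Minimum"]  # height of the defect's bounding box
    out["Luminosity"] = out["Maximum_of_Luminosity"] - out["Minimum_of_Luminosity"]
    out["Area_Perimeter_Ratio"] = out["Pixels_Areas"] / (out["X_Perimeter"] + out["Y_Perimeter"])
    source_cols = ["X_Minimum", "X_Maximum", "Y_Minimum", "Y_Maximum",
                   "Minimum_of_Luminosity", "Maximum_of_Luminosity",
                   "Pixels_Areas", "X_Perimeter", "Y_Perimeter"]
    return out.drop(columns=source_cols)


df = pd.DataFrame({
    "X_Minimum": [10], "X_Maximum": [30], "Y_Minimum": [100], "Y_Maximum": [140],
    "Minimum_of_Luminosity": [40], "Maximum_of_Luminosity": [120],
    "Pixels_Areas": [300], "X_Perimeter": [20], "Y_Perimeter": [40],
    "Steel_Plate_Thickness": [80],
})
result = engineer_features(df)
print(result.columns.tolist())
# ['Steel_Plate_Thickness', 'X', 'Y', 'Luminosity', 'Area_Perimeter_Ratio']
```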

Data Standardization

I applied RobustScaler to the numerical features. It centers each feature on its median and scales it by the interquartile range, which makes it robust to outliers. I transformed both the training and test datasets with it to ensure consistency.
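A minimal sketch of the scaling step. Fitting the scaler on the training data only and reusing it for the test data is the usual way to avoid leakage; whether the original workflow did exactly this is an assumption, as is the helper name `scale_features`.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import RobustScaler


def scale_features(train: pd.DataFrame, test: pd.DataFrame, cols: list[str]):
    """Fit RobustScaler on the training columns and apply the same transform to test."""
    scaler = RobustScaler()  # centers on the median, scales by the 25th-75th percentile range
    train_scaled, test_scaled = train.copy(), test.copy()
    train_scaled[cols] = scaler.fit_transform(train[cols])
    test_scaled[cols] = scaler.transform(test[cols])
    return train_scaled, test_scaled, scaler


# The 1000.0 outlier barely affects the median (3) or IQR (4 - 2 = 2).
train = pd.DataFrame({"area": [1.0, 2.0, 3.0, 4.0, 1000.0]})
test = pd.DataFrame({"area": [3.0]})
train_s, test_s, scaler = scale_features(train, test, ["area"])
```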

Splitting the Data

I defined the data_split() function to split the dataset into training and testing sets using train_test_split().
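One possible shape for `data_split()`. Its real signature is not shown, so the parameters, the 80/20 ratio, and the use of `stratify=y` (which keeps rare fault classes represented in both splits) are assumptions.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def data_split(X, y, test_size=0.2, random_state=42):
    """Hypothetical data_split(): stratified hold-out split of features and labels."""
    return train_test_split(
        X, y, test_size=test_size, random_state=random_state, stratify=y
    )


X = np.arange(40).reshape(20, 2)
y = np.array([0] * 15 + [1] * 5)
X_train, X_test, y_train, y_test = data_split(X, y)
print(X_train.shape, X_test.shape)  # (16, 2) (4, 2)
```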

Handling Imbalanced Data

I implemented the oversampling() function using the SMOTE technique to handle class imbalance by oversampling the minority classes in the training set.

Feature Selection

I defined the kBestFeature() function, which selects the most significant features with SelectKBest using f_classif (the ANOVA F-test) as the score function. Features were selected based on a p-value threshold and a minimum score.
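A sketch of how `kBestFeature()` might combine the two criteria. The threshold values (0.05 and 1.0) are placeholders, and requiring both conditions together is an assumption. With `k="all"`, SelectKBest only computes the F-scores and p-values; the thresholds then do the actual filtering.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import SelectKBest, f_classif


def kBestFeature(X: pd.DataFrame, y, p_threshold=0.05, min_score=1.0) -> list[str]:
    """Hypothetical kBestFeature(): keep features with a significant, strong ANOVA F-test."""
    selector = SelectKBest(score_func=f_classif, k="all").fit(X, y)
    keep = (selector.pvalues_ < p_threshold) & (selector.scores_ > min_score)
    return X.columns[keep].tolist()


rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 50)
X = pd.DataFrame({
    "informative": y + rng.normal(scale=0.5, size=150),   # mean shifts with the class
    "uninformative": np.tile([0.0, 1.0, 2.0, 3.0, 4.0], 30),  # identical in every class
})
selected = kBestFeature(X, y)
print(selected)  # ['informative']
```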

Modeling

I created a modelling() function that sets up an XGBClassifier with specific hyperparameters. The model was trained on the oversampled training data, restricted to the selected features.

Evaluation

I used the trained model to predict on the test set and evaluated its performance with Accuracy, F1 Score, Precision, and Recall, logging the metrics to Weights & Biases (W&B).
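A sketch of computing and logging these metrics. Macro averaging (every fault class weighted equally) is an assumption, since the averaging mode is not stated. The project name `steel-plate-faults` is a placeholder. `wandb.init(project=..., mode=...)`, `run.log`, and `run.finish` are standard W&B SDK calls, and `mode="offline"` or `mode="disabled"` works without an account.

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score


def evaluate(y_true, y_pred, average="macro"):
    """Compute the four reported metrics; macro averaging weights every class equally."""
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "f1": f1_score(y_true, y_pred, average=average, zero_division=0),
        "precision": precision_score(y_true, y_pred, average=average, zero_division=0),
        "recall": recall_score(y_true, y_pred, average=average, zero_division=0),
    }


def log_to_wandb(metrics, project="steel-plate-faults", mode="online"):
    """Log a metrics dict to a W&B run (project name is a placeholder)."""
    import wandb  # imported here so evaluate() works without wandb installed

    run = wandb.init(project=project, mode=mode)
    run.log(metrics)
    run.finish()


y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 1, 2, 1, 1, 0]
metrics = evaluate(y_true, y_pred)
print(metrics)
```

In the full workflow, `log_to_wandb(evaluate(y_test, model.predict(X_test)))` would record one set of test metrics per run.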

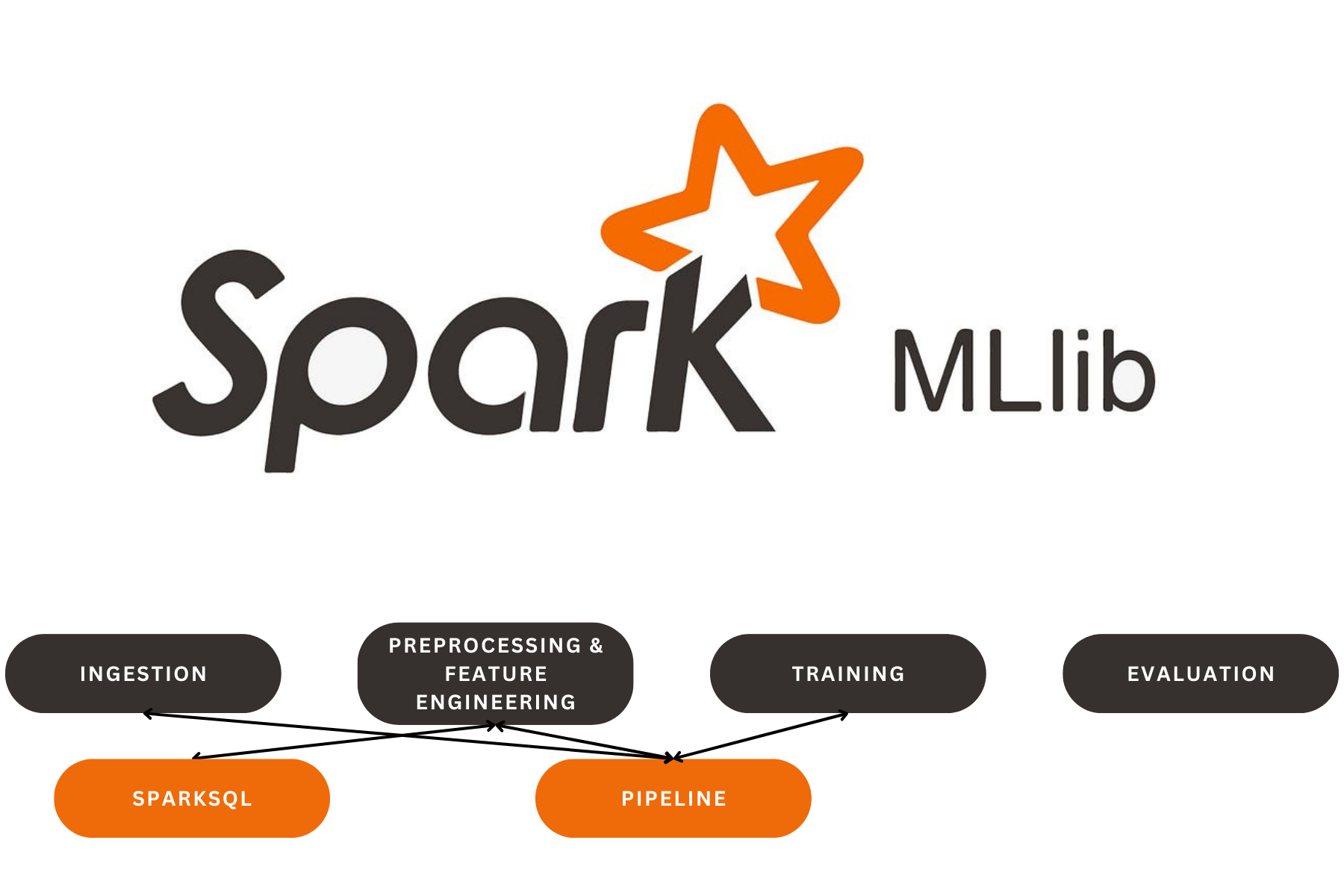

DVC Tracking & Pipeline Integration

I implemented a DVC pipeline to manage the workflow:


About

An AI Geek and a lifelong learner, who thrives in coding and problem-solving through ML, DL, and LLMs.

Copyright ©2024 All rights reserved.